Routing your Python requests through a proxy server offers several advantages. Here are some benefits of incorporating proxies into your Python web scraping or web crawling workflows:

- Enhanced Privacy: Proxies mask your IP address, making it harder for websites to track your activity.

- Access to Geo-Restricted Content: Proxies enable you to access content that might be restricted to specific geographic regions by routing your requests through servers located in those regions.

- IP Rotation: Proxies allow you to rotate IP addresses, helping you avoid IP-based rate limits or blocking from websites.

- Simultaneous Scraping: By distributing your requests across different IP addresses, proxies let you scrape multiple websites at the same time with less risk of triggering blocks or restrictions.

- Scalability: Proxies provide a scalable solution for web scraping, allowing you to handle larger volumes of data by adding more proxy servers.

- Improved Performance: Choosing a proxy server located close to the target website can reduce latency and improve speed, although every proxy adds an extra network hop.

- Firewall and Filter Bypass: Proxies help you bypass firewalls, content filters, or network restrictions, enabling access to blocked or restricted websites.

- Security: Some proxies add an extra layer of security by inspecting requests and responses, which can help flag potential threats before they reach your system.

- A/B Testing and Data Analysis: Proxies allow you to simulate requests from different user segments, locations, or user agents, facilitating A/B testing and diverse data collection for analysis.

By using proxies in your Python web scraping workflows, you can enhance privacy, access restricted content, rotate IP addresses, scale up, optimize performance, bypass filters, add a layer of security, and conduct meaningful data analysis.
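The IP rotation and request distribution described above can be sketched on the client side by cycling through a list of proxies and handing each request the next one. This is a minimal sketch, not a provider feature: the pool addresses are hypothetical placeholders from a documentation IP range, and each yielded dict has the shape that the `proxies` argument of `requests.get` expects.

```python
from itertools import cycle
from typing import Dict, Iterator, List

# Hypothetical proxy URLs (203.0.113.0/24 is reserved for documentation);
# replace them with your own proxy details.
PROXY_POOL: List[str] = [
    "http://username:password@203.0.113.10:8080",
    "http://username:password@203.0.113.11:8080",
    "http://username:password@203.0.113.12:8080",
]


def rotating_proxies(pool: List[str]) -> Iterator[Dict[str, str]]:
    """Yield a requests-style proxies dict per request, round-robin over pool."""
    if not pool:
        raise ValueError("proxy pool must not be empty")
    for proxy_url in cycle(pool):
        # Send both http:// and https:// target URLs through the same proxy.
        yield {"http": proxy_url, "https": proxy_url}
```

In a scraping loop you would call `proxies = next(proxy_iter)` before each `requests.get(url, proxies=proxies, timeout=10)`, so consecutive requests leave through different IPs. Round-robin is the simplest policy; random selection or per-proxy cooldowns are common alternatives.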

To implement proxy usage in Python, you can use the requests library along with the proxies parameter. Here’s an example of how you can incorporate proxies into your code:

```python
import requests

# URL to scrape
url = 'https://www.example.com'  # Replace with the desired website URL

# Proxy configuration
proxy = 'http://username:password@proxy_ip:proxy_port'  # Replace with your proxy details

# Route both http:// and https:// target URLs through the same proxy
proxies = {
    'http': proxy,
    'https': proxy,
}

# Send a GET request using the proxy (the timeout avoids hanging on a dead proxy)
response = requests.get(url, proxies=proxies, timeout=10)

# Check if the request was successful
if response.status_code == 200:
    # Process the response content
    print(response.text)
else:
    print('Request failed with status code:', response.status_code)
```

In the code above, replace `http://username:password@proxy_ip:proxy_port` with your actual proxy details. For scraping, we recommend our residential proxies, because their rotation setup suits this use case:

The residential network offers two rotation options:

1) Request-based rotation: your IP rotates on each request made by your tool or browser (such as a page refresh).

2) Sticky IP sessions: you get a sticky IP that lasts from a few minutes up to one hour, and you can change it on demand before it expires.

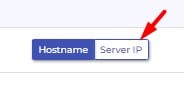
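To see which rotation mode is in effect, you can send a few requests through the proxy to an IP-echo service and compare the exit IPs. This is a minimal sketch, assuming `https://api.ipify.org` (a public service that returns the caller's IP as plain text) as the check URL; any similar endpoint would do. The `get` parameter is a hypothetical hook for swapping in a stub, and `requests` is imported only when no stub is supplied.

```python
from typing import Any, Callable, Dict, List, Optional


def observe_exit_ips(
    proxies: Dict[str, str],
    n: int = 3,
    check_url: str = "https://api.ipify.org",  # assumed IP-echo endpoint
    get: Optional[Callable[..., Any]] = None,
) -> List[str]:
    """Send n requests through the proxy and return the exit IP seen each time."""
    if get is None:
        import requests  # imported lazily so a stub can stand in during tests
        get = requests.get
    ips: List[str] = []
    for _ in range(n):
        response = get(check_url, proxies=proxies, timeout=10)
        response.raise_for_status()
        # The echo service replies with the caller's public IP as plain text.
        ips.append(response.text.strip())
    return ips


def looks_rotating(ips: List[str]) -> bool:
    """Return True if more than one distinct exit IP was observed."""
    return len(set(ips)) > 1
```

With request-based rotation you would normally expect `looks_rotating(observe_exit_ips(proxies))` to return `True`; inside a sticky session, the same IP should repeat until the session expires or you change it. A large pool can occasionally hand out the same IP twice, so treat a single repeat as a hint rather than proof.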

The format of our residential proxies is `username:password@piratproxy.com:6700`. Instead of the hostname `piratproxy.com`, you can also use the server's numeric IP address, which you can find in your dashboard under manage access -> server IP.
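When you assemble the proxy URL in code, credentials containing characters such as `@` or `:` must be percent-encoded, or the URL is parsed incorrectly. The helper below is a minimal sketch: `build_proxies` is a hypothetical name, the `http://` proxy scheme follows the example earlier in this article, and the host can be either `piratproxy.com` or the numeric server IP from your dashboard.

```python
from typing import Dict, Optional
from urllib.parse import quote


def build_proxies(
    host: str,
    port: int,
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> Dict[str, str]:
    """Return a requests-style proxies dict for a single proxy server."""
    if username is not None and password is not None:
        # Percent-encode credentials so '@' or ':' in them cannot be
        # mistaken for URL delimiters.
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    else:
        # No authentication: leave out the username:password@ part entirely.
        auth = ""
    proxy_url = f"http://{auth}{host}:{port}"
    # Use the same proxy for both http:// and https:// target URLs.
    return {"http": proxy_url, "https": proxy_url}
```

For example, `build_proxies('piratproxy.com', 6700, 'username', 'password')` returns a dict you can pass as `proxies=` to `requests.get()`; requests decodes the percent-encoded credentials before authenticating with the proxy.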

If your proxy does not require authentication, remove the `username:password@` part from the URL. The `proxies` parameter is a dictionary that maps a URL scheme to a proxy: the `'http'` key applies to requests for `http://` URLs and the `'https'` key to requests for `https://` URLs. In this example, the same proxy handles both. If your HTTP and HTTPS traffic should go through different proxies, set each key accordingly. When you pass `proxies` to the `requests.get()` function, the request is sent through the specified proxy, and you can then process the response as needed.
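Building on the `proxies` parameter, a scraper often has to cope with a proxy that is down or unreachable. One approach, sketched below under the assumption that you have several proxy URLs to choose from, is to try each in turn and return the first response that comes back. `fetch_with_fallback` and its `get` hook are hypothetical names; `requests` is imported only when no stub is supplied.

```python
from typing import Any, Callable, Optional, Sequence


def fetch_with_fallback(
    url: str,
    proxy_urls: Sequence[str],
    get: Optional[Callable[..., Any]] = None,
    timeout: float = 10.0,
) -> Any:
    """Try each proxy in turn and return the first response received."""
    if get is None:
        import requests  # imported lazily so a stub can stand in during tests
        get = requests.get

    last_error: Optional[Exception] = None
    for proxy_url in proxy_urls:
        proxies = {"http": proxy_url, "https": proxy_url}
        try:
            return get(url, proxies=proxies, timeout=timeout)
        except OSError as exc:
            # requests' exceptions (ProxyError, ConnectTimeout, ...) derive
            # from OSError, so a dead or unreachable proxy lands here.
            last_error = exc
    raise RuntimeError(f"all {len(proxy_urls)} proxies failed") from last_error
```

Only connection-level failures move on to the next proxy; an HTTP error status such as 403 or 429 still returns a response, so check `response.status_code` as in the example above if you also want to retry on those.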